Using Docker¶

Docker lets you run each project in its own container, so projects that need different CUDA environments can coexist on the same workstation.

Note

Before using Docker, you should know the basics of the command-line interface (CLI). See our linux_tutorial if you want to learn more about the CLI.

Docker Containers¶

[Figure: Docker containers (apps) compared with virtual machines]

Port mapping between workstation and container¶

Description: create a container with a range of ports mapped to the workstation.

nvidia-docker run -it -v /data/[user_name]:/workspace/[user_name] --shm-size=128gb \
    -v /etc/timezone:/etc/timezone:ro \
    -v /etc/localtime:/etc/localtime:ro -p 100XX-100XX:100XX-100XX \
    --name [container_name] pytorch/pytorch:1.9.0-cuda11.1-cudnn8-devel
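The placeholders in the command above can be filled in from variables. The following is a minimal sketch, not the required workflow: `build_run_cmd` is a hypothetical helper, and the user name `alice`, the port range 10000-10009, and the container name `alice_pytorch` are made-up example values. It only prints the assembled command so you can review it before running it.

```shell
# Sketch: assemble the container-creation command from four values and print it.
# All argument values used below are hypothetical examples.
build_run_cmd() {
    user_name="$1"
    port_start="$2"
    port_end="$3"
    container_name="$4"
    # Host and container use the same port range, as in the command above.
    ports="${port_start}-${port_end}"
    printf '%s ' \
        nvidia-docker run -it \
        -v "/data/${user_name}:/workspace/${user_name}" \
        --shm-size=128gb \
        -v /etc/timezone:/etc/timezone:ro \
        -v /etc/localtime:/etc/localtime:ro \
        -p "${ports}:${ports}" \
        --name "${container_name}" \
        pytorch/pytorch:1.9.0-cuda11.1-cudnn8-devel
    printf '\n'
}

# Print the command for review; nothing is executed.
build_run_cmd alice 10000 10009 alice_pytorch
```

Printing instead of executing makes it easy to spot a wrong port range or volume path before the container is created.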

Docker Images¶

Description: list the images stored on the workstation.

docker images

Note

You can browse available Docker images on Docker Hub.
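If an image is not yet stored locally, it can be pulled from Docker Hub. The sketch below assumes a hypothetical helper named `ensure_image`; it uses `docker image inspect` to test whether the image is present and calls `docker pull` only when it is missing.

```shell
# Sketch: pull an image only if it is not already stored locally.
# `ensure_image` is a hypothetical helper name, not a Docker command.
ensure_image() {
    image="$1"
    # `docker image inspect` exits with a non-zero status if the image is absent.
    if docker image inspect "$image" >/dev/null 2>&1; then
        echo "already stored locally: $image"
    else
        docker pull "$image"
    fi
}

# Usage: ensure_image pytorch/pytorch:1.9.0-cuda11.1-cudnn8-devel
```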

Useful Docker commands¶

Check GPU status¶

nvidia-smi

List active containers¶

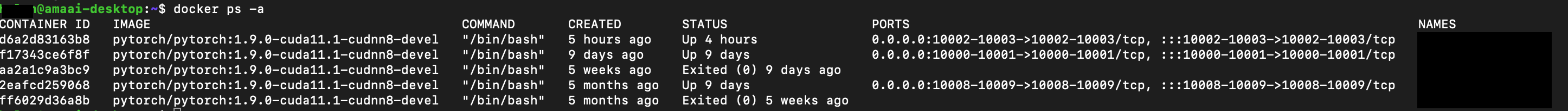

docker ps
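The default `docker ps` table can be wide. As a sketch, the hypothetical helper `list_containers` below uses the `--format` option to show only the name, status, and port mapping of each container; any extra arguments, such as `-a`, are passed through to `docker ps`.

```shell
# Sketch: show only name, status, and port mapping for each container.
# `list_containers` is a hypothetical helper name; pass -a to include
# stopped containers.
list_containers() {
    docker ps "$@" --format 'table {{.Names}}\t{{.Status}}\t{{.Ports}}'
}

# Usage: list_containers        (running containers only)
#        list_containers -a     (all containers)
```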

Enter container¶

docker exec -it [container_name] bash

Note

If your container is not running, you must start it before you can enter it. Use 'docker ps -a' to check the status of all containers, including stopped ones.

docker start [container_name]
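The check, start, and enter steps can be combined. The following sketch assumes a hypothetical helper named `enter_container`; it reads the container state with `docker inspect`, starts the container only if it is not running, and then opens a bash shell inside it.

```shell
# Sketch: start the container if it is stopped, then open a shell in it.
# `enter_container` is a hypothetical helper name, not a Docker command.
enter_container() {
    name="$1"
    # `docker inspect` prints "true" when the container is running.
    running="$(docker inspect -f '{{.State.Running}}' "$name")" || return 1
    if [ "$running" != "true" ]; then
        docker start "$name" || return 1
    fi
    docker exec -it "$name" bash
}

# Usage: enter_container [container_name]
```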

Exit container¶

exit

Open Jupyter Notebook on the workstation¶

jupyter notebook --ip=0.0.0.0 --port=[100XX] --allow-root --no-browser

Note

Open Jupyter in your browser at the port you chose: http://localhost:100XX/
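Jupyter is reachable from the workstation only if its port was mapped when the container was created. The sketch below assumes a hypothetical helper named `jupyter_cmd` and a made-up mapped range of 10000-10009; it checks that the chosen port lies in the range, then prints the Jupyter command and the matching URL.

```shell
# Sketch: check that a port lies in the mapped range, then print the
# Jupyter command and the URL to open. The range 10000-10009 is a
# hypothetical example; use the range given to -p at container creation.
jupyter_cmd() {
    port="$1"
    range_start="$2"
    range_end="$3"
    if [ "$port" -lt "$range_start" ] || [ "$port" -gt "$range_end" ]; then
        echo "port $port is outside the mapped range $range_start-$range_end" >&2
        return 1
    fi
    echo "jupyter notebook --ip=0.0.0.0 --port=$port --allow-root --no-browser"
    echo "http://localhost:$port/"
}

jupyter_cmd 10005 10000 10009
```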

Monitor GPU usage¶

Description: refresh the nvidia-smi output at a custom interval. For example, update every 0.2 s; the -d flag highlights values that changed between updates.

watch -d -n 0.2 nvidia-smi
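As an alternative to redrawing the full table, nvidia-smi can print selected metrics as CSV on its own. The sketch below assumes a hypothetical helper named `log_gpu_usage`; the `NVIDIA_SMI` variable is also an assumption, added only so that a different binary path can be substituted. The interval passed to `-l` is in seconds.

```shell
# Sketch: log selected GPU metrics as CSV rows at a fixed interval.
# `log_gpu_usage` and the NVIDIA_SMI override are hypothetical names.
log_gpu_usage() {
    interval="${1:-1}"   # seconds between samples, default 1
    "${NVIDIA_SMI:-nvidia-smi}" \
        --query-gpu=timestamp,utilization.gpu,memory.used,memory.total \
        --format=csv \
        -l "$interval"
}

# Usage: log_gpu_usage 2    (one row per GPU every 2 seconds, Ctrl+C to stop)
```

CSV output is easier to redirect to a file and inspect later than the interactive `watch` view.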